As we discussed in the last blog, containers are increasingly popular, but they present security risks that can expose businesses to lost productivity, reduced sales, reputational damage, and even millions of dollars in fines. Most organizations now take this seriously and are implementing tools and processes to handle container security incidents.

We are going to start at the base of this journey: the image build. Containers are mostly built by DevOps engineers or developers, and bad practices followed at this stage are a major reason an attack surface opens up for attackers. Let’s see how we can reduce the risks by following some practices that are widely accepted in this container era.

NB: We will be using Docker for building images and for the demonstrations.

Use minimal base images

Container security starts at the infrastructure layer and is only as strong as this layer. The base image largely determines both the size and the security posture of the final image.

Always select a base image that is minimal in size and still satisfies your requirements. Many images ship a complete OS; avoid those unless you really need them. It is very likely that some of the bundled libraries have vulnerabilities, which in turn make your image vulnerable. Selecting a small image with the latest patches limits the attack surface.

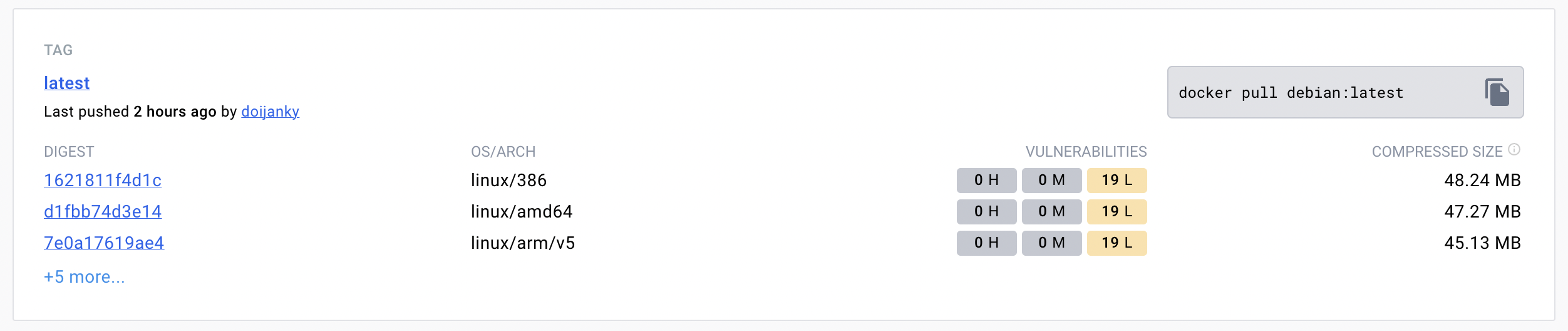

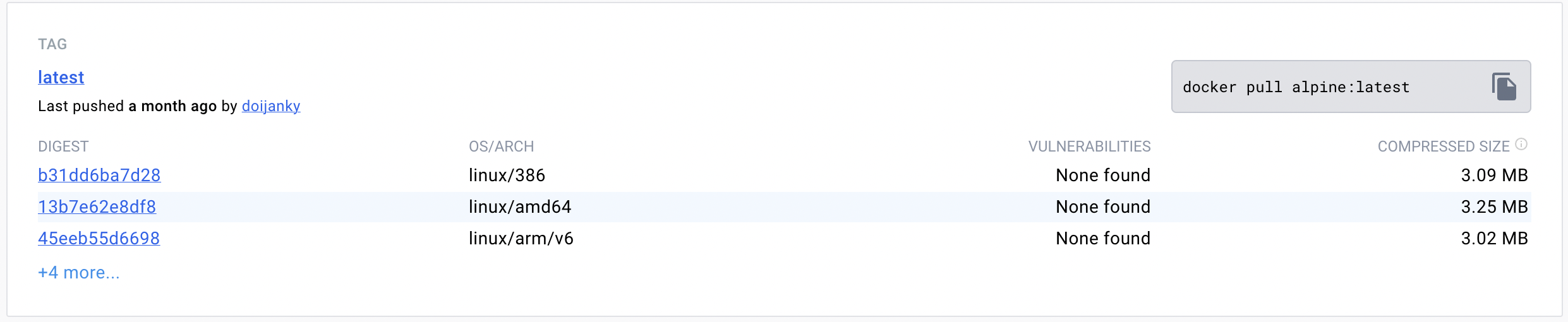

For instance, let’s compare Debian and Alpine images for Linux.

Alpine, one of the smallest Linux distributions, is roughly 10x smaller than Debian. Alpine images also tend to report fewer known vulnerabilities than Debian images. When comparing vulnerabilities, give priority to those rated High and Medium.

Use a specific image tag or version

When creating a Docker image, use a specific tag or version instead of "latest" to ensure traceability of the exact image being used. This makes troubleshooting easier when dealing with running containers. If the program running inside the container depends on libraries or configuration from the base image, an unnoticed change behind "latest" may crash the application, so it is better to pin a version.

    FROM nginx:1.24.0-perl

Remove unnecessary packages/software from the image

In most cases, base images contain system or external libraries that are either vulnerable or unwanted. To reduce the attack surface, use a minimal base image that includes only the essential components needed for your application. Alpine Linux is a popular choice.

We can then add only the packages that are essential for our application. Similarly, if we find that a system library is vulnerable, we can remove it as well.

An example is shown below

    RUN apk --no-cache add package-a
    RUN apk del package-b

Use the least privileged user for running a container

Root is the default user inside a Docker container: if you don’t specify a USER in the Dockerfile, the container runs as root. Running a container with root privileges is a big risk. If an attacker compromises the container and gets into it, they can run things as the root user, which makes it much easier to break out of the container and gain privileges on the host.

    RUN groupadd -g 10001 appuser
    RUN useradd -u 10000 -g appuser appuser
    RUN chown -R appuser:appuser /<working-directory>
    USER appuser:appuser

By creating a dedicated user and group and setting appropriate ownership on the working directory, these Dockerfile instructions contribute to improving the security and isolation of the application running inside the Docker container.
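The `groupadd` and `useradd` commands above are typically found on Debian-style images. Alpine-based images ship the BusyBox `addgroup` and `adduser` tools instead, so an equivalent setup might look like the sketch below. The `alpine:3.18` tag and the `/app` working directory are assumptions made for illustration only.

```dockerfile
FROM alpine:3.18

# BusyBox equivalents of groupadd/useradd; -S creates a system group/user
RUN addgroup -g 10001 -S appuser \
    && adduser -u 10000 -S -G appuser appuser

# /app is a hypothetical working directory used only for this sketch
WORKDIR /app
RUN chown -R appuser:appuser /app

# Every later instruction, and the running container, uses this user
USER appuser:appuser
```

Using fixed numeric IDs, as in the original example, keeps file ownership predictable when volumes are mounted from the host.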

Sign and verify images

Container image signing refers to the process of adding a digital signature to a container image. The purpose of this signature is to ensure that the image is authentic and has not been tampered with or modified in any way. By verifying the signature, you can confirm that the image you are using is the same one that was originally signed.

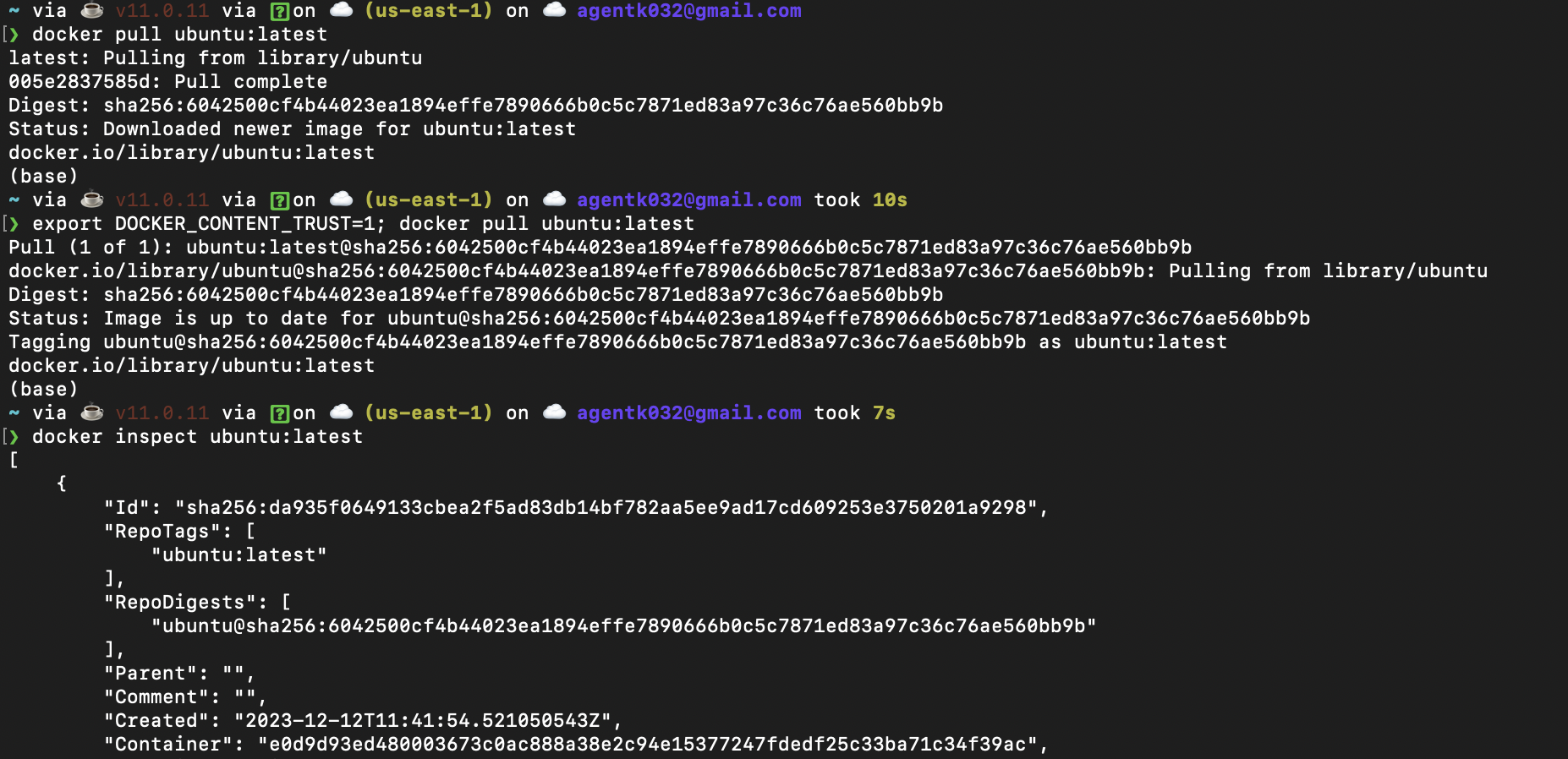

Docker has a feature called Docker Content Trust (DCT) to validate signatures. Content trust is disabled by default in the Docker client so that users can pull unsigned images. To enable it, set the DOCKER_CONTENT_TRUST environment variable to 1.

Let’s try pulling an image

Without content trust, docker pull shows only the repo digest. With content trust enabled, you can see that it also verifies the signature. You can also use docker inspect to check the integrity of the image by comparing the digest.

Don’t Leak Confidential data to Docker Images

Building a container image often involves installing packages or downloading code from external or internal repositories. If you’re installing private code, you often need a secret to gain access: a password, a private key, or a token.

It's important to ensure that any sensitive data or information is not included in the final image of a project. If this information is present in the image, anyone with access to it can easily extract it, potentially causing serious harm.

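By storing secrets in the Dockerfile, we give unauthorized individuals the ability to access our private repository, which can be a major security risk.

Docker has features such as environment variables and build args that let us avoid hardcoding these secrets. Keep in mind that build arg values can still be recovered with docker history from any image that contains the stage using them, so confine them to a build stage that is not shipped.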

    # Stage 1: Build the Go application
    FROM golang:1.20 AS builder
    WORKDIR /backend
    ARG BITBUCKET_APP_PASSWORD
    ARG BITBUCKET_USERNAME
    ARG DEPLOYMENT_VERSION=development
    RUN git config --global url."https://${BITBUCKET_USERNAME}:${BITBUCKET_APP_PASSWORD}@bitbucket.org/".insteadOf "https://bitbucket.org/"

Here, as you can see, the Git credentials are configured using build args in Docker.

We can pass these using environment variables as well. Never copy .env or other sensitive files into an image; add them to the .dockerignore file instead. At the deployment stage, you can mount them at runtime, for example as Kubernetes Secrets.
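BuildKit secret mounts are another option worth considering, because the secret is only available while a single RUN step executes and is not stored in image layers or history. The following is a minimal sketch, not a drop-in replacement. It assumes BuildKit is enabled and that `go.mod` and `go.sum` exist in the build context. The secret id `bitbucket_app_password` and the `go mod download` step are hypothetical choices made for illustration.

```dockerfile
# syntax=docker/dockerfile:1
FROM golang:1.20 AS builder
WORKDIR /backend
ARG BITBUCKET_USERNAME

# Assumes go.mod and go.sum are present in the build context
COPY go.mod go.sum ./

# The secret is mounted at /run/secrets/<id> for this RUN step only.
# The git config file is removed in the same step, so the credential
# never ends up in a committed layer.
RUN --mount=type=secret,id=bitbucket_app_password \
    git config --global url."https://${BITBUCKET_USERNAME}:$(cat /run/secrets/bitbucket_app_password)@bitbucket.org/".insteadOf "https://bitbucket.org/" \
    && go mod download \
    && rm -f /root/.gitconfig
```

The secret would be supplied at build time with something like `docker build --secret id=bitbucket_app_password,src=<path-to-secret-file> --build-arg BITBUCKET_USERNAME=<username> .`, where both placeholders stand for your own values.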

Use Multi-Stage Builds

With multi-stage builds, you use multiple FROM statements in your Dockerfile. Each FROM instruction can use a different base, and each of them begins a new stage of the build. You can selectively copy artifacts from one stage to another, leaving behind everything you don't want in the final image.

Multi-stage builds have several benefits:

- Reduces image size: In most languages, build tools and intermediate artifacts are needed only during the build phase. If we separate the build and run phases into two stages, the final image is smaller and contains only what the application needs to run.

- Security: Packages and dependencies need to be kept up to date because they can be a potential source of vulnerability for attackers to exploit. Therefore, you should only keep the required dependencies. Using Docker multi-stage build means the resulting container will be more secure because your final image includes only what it needs to run the application.

    # Build stage
    FROM ubuntu:22.04 AS builder
    # ... build steps ...

    # Final stage
    FROM alpine:3.18
    COPY --from=builder /app /app

Multi-stage builds can reduce the attack surface by leaving vulnerable build-time dependencies out of the final image.
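To make the pattern more concrete, here is a hedged sketch for a Go service. It assumes the build context contains a Go module with a main package at its root. The paths `/backend` and `/app`, the image tags, and the numeric user IDs are illustrative choices rather than requirements.

```dockerfile
# Stage 1: compile a static binary using the full Go toolchain
FROM golang:1.20 AS builder
WORKDIR /backend
COPY . .
# CGO_ENABLED=0 avoids linking against glibc, so the binary runs on Alpine
RUN CGO_ENABLED=0 go build -o /app .

# Stage 2: ship only the binary on a small, pinned base image
FROM alpine:3.18
COPY --from=builder /app /app
# Run as an unprivileged numeric UID:GID
USER 10000:10001
ENTRYPOINT ["/app"]
```

The Go toolchain, source code, and module cache stay in the builder stage, so none of them are present in the image that gets deployed.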

Use COPY instead of ADD in Dockerfile

Docker provides two instructions for copying files into the image when building it: COPY and ADD. The instructions are similar but differ in their functionality.

COPY copies files and directories from the build context, and the source and destination must be specified explicitly. ADD does the same, but it also accepts remote URLs as a source, which it downloads, and local tar archives, which it automatically extracts into the destination directory. Both create the destination directory if it doesn’t exist.

Because ADD can fetch files from remote URLs, there is a risk of man-in-the-middle attacks. ADD also extracts local archives into a directory automatically, which makes it an attack surface for archive-based attacks such as zip bombs.
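One way to apply this is to use COPY for everything in the build context and, when a remote file is genuinely needed, to download it in a RUN step and verify its checksum before unpacking. The sketch below is illustrative only: the URL, the file paths, and the `<expected-sha256>` placeholder are hypothetical and must be replaced with real values.

```dockerfile
FROM alpine:3.18
WORKDIR /app

# Local files: COPY with explicit source and destination
COPY ./config/app.conf /app/config/app.conf

# Remote files: instead of `ADD https://example.com/tool.tar.gz /opt/`,
# download over HTTPS, verify the checksum, then extract and clean up
RUN apk --no-cache add curl \
    && curl -fsSL -o /tmp/tool.tar.gz https://example.com/tool.tar.gz \
    && echo "<expected-sha256>  /tmp/tool.tar.gz" | sha256sum -c - \
    && tar -xzf /tmp/tool.tar.gz -C /opt \
    && rm /tmp/tool.tar.gz
```

If the checksum does not match, `sha256sum -c` exits with a non-zero status and the build fails before anything is extracted.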

Do Not Expose Unwanted Ports

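The Dockerfile declares, through the EXPOSE instruction, which ports a running container is expected to listen on. These ports are published automatically when the container is started with docker run -P, so only the ports that are needed and relevant to the application should be exposed. If you have access to the Dockerfile, look for the EXPOSE instructions to see which ports are declared.

Ports like 22, which is used for SSH, should not be exposed, because doing so increases the attack surface.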
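As a small illustration, a web server image would normally declare only its HTTP port. The base image and port number below are assumptions chosen for the example.

```dockerfile
FROM nginx:1.24.0

# Declare only the port the web server actually listens on
EXPOSE 80

# Avoid declaring management ports such as SSH:
# EXPOSE 22
```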

Scan Your Images

Scanning your images after each build is necessary because new vulnerabilities are disclosed every day. It is therefore important to keep checking the dependencies we use in our project.

Several tools are available for container image scanning, such as:

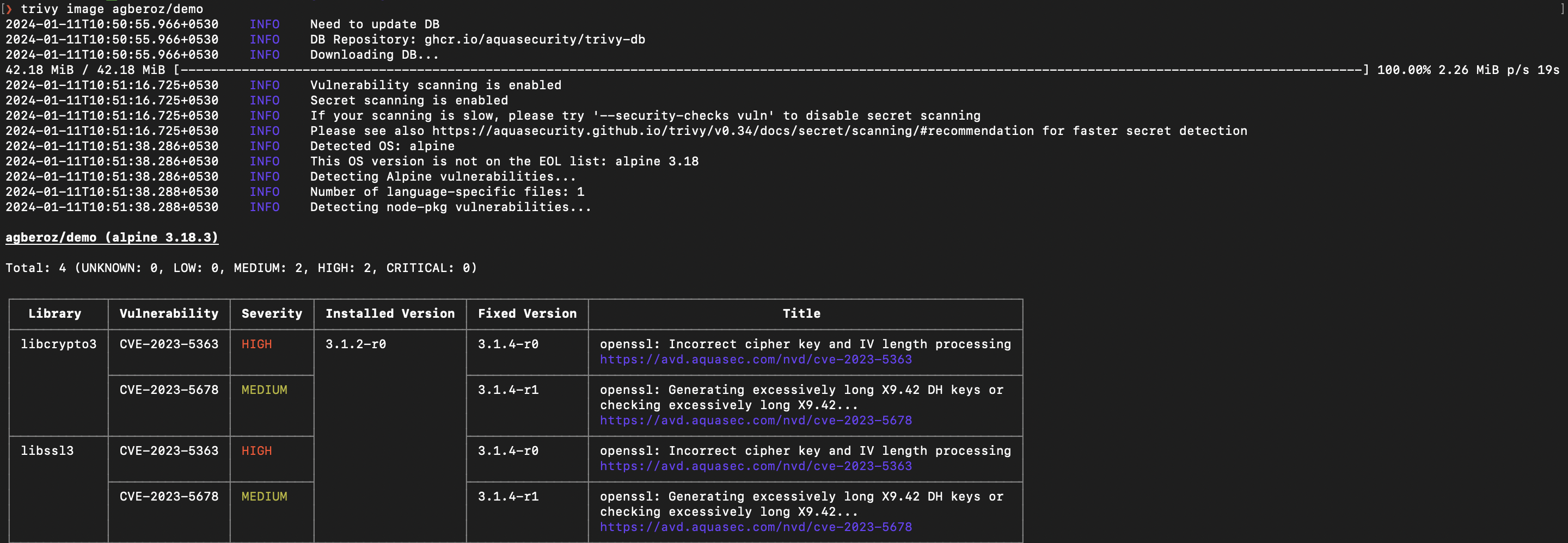

For instance, we can scan an image with Trivy by running trivy image <image-name>.

As you can see, Trivy can detect vulnerabilities in the packages used inside the container. If there are severe vulnerabilities, consider switching to a safer image. Regularly checking for vulnerabilities, or including such tools in your build pipelines, is a good practice.

These are some good practices we need to follow to build secure container images. However, they do not solve every container security issue on their own. Taking steps to harden your build environment is critical to maintaining good security for your containers, applications, and host machine. In this guide, we’ve covered some key steps you can take to create safer images and implement container security at build time.